How to Use GSC Crawl Stats Reports to Improve Your SEO

Google is no longer crawling every page on your site equally, and that’s a problem.

In recent years, Google has become more selective about how it uses its crawl budget. That means some of your pages might not be crawled often, or at all. And if Google isn’t crawling them, it likely isn’t indexing or ranking them either.

One of the most effective ways to understand which pages Google values (and which it ignores) is to review the Crawl Stats report and the Page indexing report in Google Search Console.

In this guide, I’ll show you how to find pages that Google rarely crawls and explain what to do about them so that you can improve your site’s indexation, visibility, and performance.

Step 1: Find Low-Crawl Pages

To see when Google last crawled each of your indexed pages, and which ones it’s quietly ignoring, we’ll use the Page indexing report in Google Search Console.

- Open Google Search Console and choose your property.

- Go to “Pages” under the “Indexing” section in the left-hand menu.

- In the main report, click “View data about indexed pages”.

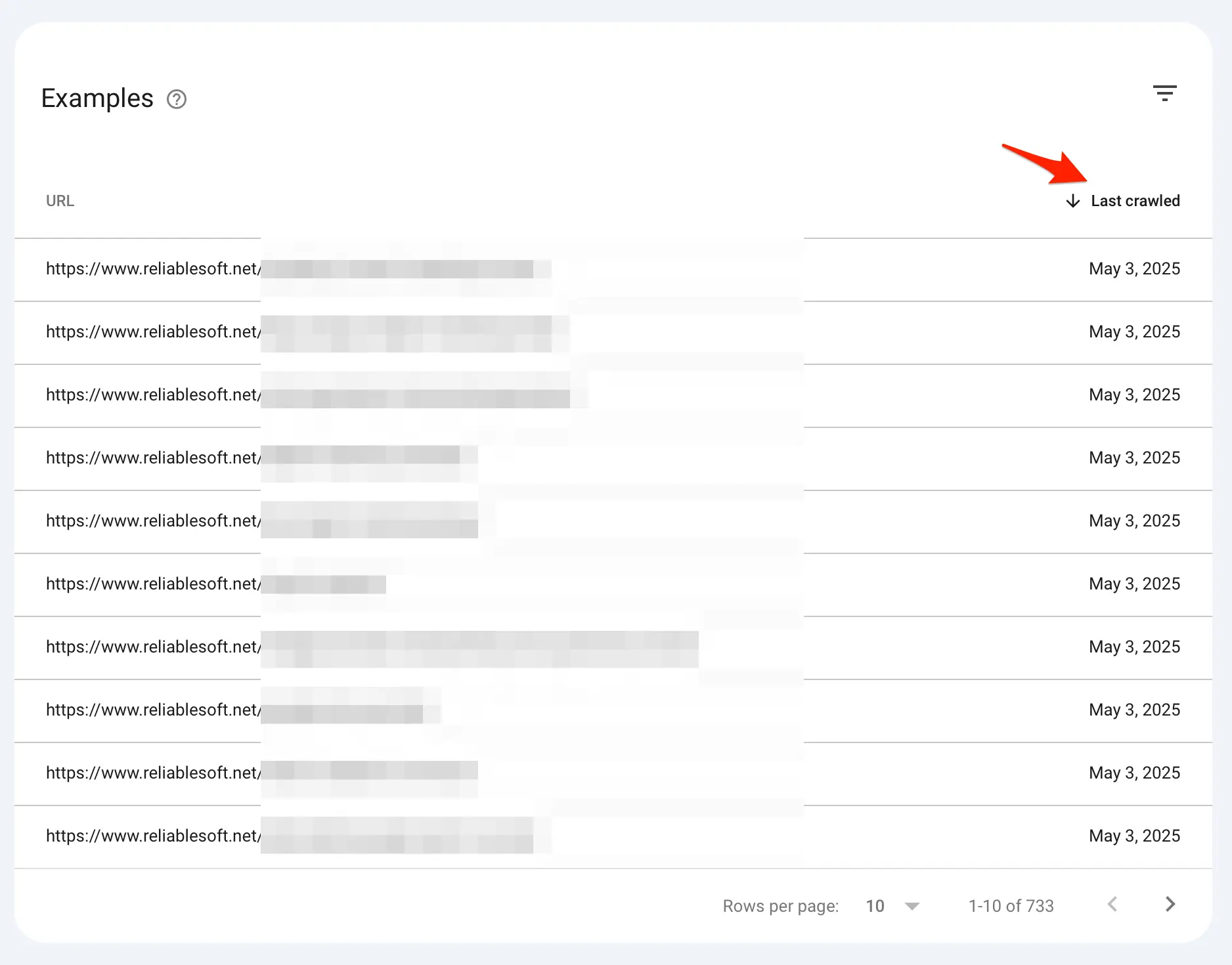

Go through the report (all the way to the last page) and look at the “Last crawled” column. You can also export the table to sort and filter it in a spreadsheet.

If you see pages that haven’t been crawled in a long time, say, over 60 days, it’s a signal that Google may not consider them useful, fresh, or relevant to your site’s main theme.
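If you export the table, a short script can flag stale pages for you. This is a minimal sketch that assumes the export is a CSV with a "URL" column and a "Last crawled" column in YYYY-MM-DD format; check your own file's headers and date format and adjust the constants. The 60-day threshold mirrors the rule of thumb above, and the function name is hypothetical.

```python
import csv
import io
from datetime import date, datetime

# Assumed column names and date format; adjust them to match your own export.
URL_COL = "URL"
CRAWLED_COL = "Last crawled"
DATE_FORMAT = "%Y-%m-%d"


def stale_pages(csv_text, today, max_age_days=60):
    """Return (url, days_since_crawl) for pages not crawled within max_age_days."""
    stale = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        crawled = datetime.strptime(row[CRAWLED_COL], DATE_FORMAT).date()
        age = (today - crawled).days
        if age > max_age_days:
            stale.append((row[URL_COL], age))
    # Oldest crawls first, so the most neglected pages appear at the top.
    return sorted(stale, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical sample data standing in for a real export.
    sample = """URL,Last crawled
https://example.com/,2024-06-01
https://example.com/old-guide/,2024-02-10
https://example.com/blog/post-1/,2024-03-20
"""
    for url, age in stale_pages(sample, today=date(2024, 6, 2)):
        print(f"{age:>4} days  {url}")
```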

Also look at your site’s crawl behavior as a whole. Google rarely crawls every page every day: large, frequently updated sites may get thousands of crawl requests per day, while smaller or slower sites may see only a few dozen.

Step 2: Fix or Remove Low-Value Pages

Google ignoring a page doesn’t always mean it’s low quality. Sometimes the page simply lacks the signals that show it matters. Your job now is either to strengthen those signals so Google sees the page as important, or to remove the page if it doesn’t need to be indexed.

As a rule of thumb, keep pages in Google’s index only if they align with your business goals. What matters is not quantity (how many pages are indexed) but quality (how many good pages you have in the index).

Here’s how to approach it:

1. Improve Internal Linking

Google uses internal links to discover and prioritize content. If a page is buried deep in your site with no links pointing to it, or only linked from low-value pages, it’s easy for Google to ignore it.

Make sure under-crawled pages are linked from:

- Relevant high-traffic pages

- Your main menu (if appropriate)

- Newer blog posts

Use meaningful anchor text that reflects the page’s topic.
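If you have a list of internal links from a site crawl (many desktop SEO crawlers can export one), you can spot weakly linked pages programmatically. This sketch assumes the links are already loaded as (source, target) URL pairs; the function names and the threshold of two referring pages are illustrative choices, not a standard.

```python
from collections import Counter


def inlink_counts(links, pages):
    """Count how many distinct internal pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in pages})
    for source, target in set(links):  # set() drops repeated links from the same page
        if source != target and target in counts:
            counts[target] += 1
    return counts


def weakly_linked(links, pages, min_inlinks=2):
    """Pages with fewer than min_inlinks distinct referring pages, weakest first."""
    counts = inlink_counts(links, pages)
    return sorted((p for p in pages if counts[p] < min_inlinks), key=lambda p: (counts[p], p))


if __name__ == "__main__":
    # Hypothetical crawl data: (linking page, linked page) pairs.
    pages = ["/", "/services/", "/blog/", "/blog/old-guide/", "/about/"]
    links = [
        ("/", "/services/"), ("/", "/blog/"), ("/", "/about/"),
        ("/blog/", "/"), ("/blog/", "/services/"), ("/blog/", "/blog/"),
        ("/about/", "/"), ("/about/", "/services/"), ("/", "/services/"),
    ]
    counts = inlink_counts(links, pages)
    for page in weakly_linked(links, pages):
        print(page, counts[page])
```

Pages with zero referring pages are effectively orphaned and are the first candidates for new links from the sources listed above.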

2. Refresh and Improve the Content

If the page hasn’t changed in a long time (more than a year), Google may assume it’s outdated or irrelevant and prioritize other pages on the same topic that are updated more often.

Update the page content in a meaningful way to give Google a reason to recrawl it. You can do this by:

- Adding new sections

- Rewriting the intro

- Including new visuals

- Revising the page title and meta description

3. Check Current Search Intent

Another important consideration is whether the content still aligns with current search intent. Even if a page was useful when first published, search behavior and Google’s preferences change.

If your content doesn’t satisfy users’ current needs, Google may downrank it, even if it’s technically accurate.

A topic that once required a how-to guide might now demand a quick checklist or a product comparison. The easiest way to understand the current search intent is to search for your target query on Google, in Google AI Mode, and in other LLM-based tools.

Pay close attention to the format and type of results Google shows on page one and to the answers the LLMs return. Your content should follow the same pattern and structure.

4. Check for Duplicate Content

You should also check if the page competes with another page on your site. This is called keyword cannibalization and often happens when multiple articles target the same topic or keyword.

When two or more pages are similar, they dilute each other’s authority. In this case, you can:

- Consolidate them into one stronger page and redirect the weaker version

- Redirect one page to the other (without consolidation)

- Remove the duplicate by deleting it or adding a noindex tag

- Use canonical URLs to tell Google which is the preferred version of a page
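To find candidate duplicates before choosing one of these options, you can compare page text directly. The sketch below uses word shingles and Jaccard similarity, a common near-duplicate heuristic (not how Google itself detects duplicates). It assumes you have already extracted each page's main text into a dictionary; the function names and the 0.5 threshold are hypothetical starting points to tune.

```python
import re
from itertools import combinations


def shingles(text, size=3):
    """Set of overlapping word n-grams (shingles) from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of shingles two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_pairs(pages, threshold=0.5):
    """Return (url_a, url_b, score) for page pairs at or above the threshold, most similar first."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)
```

Pairs scoring close to 1.0 are strong candidates for consolidation or a canonical; mid-range scores still need a manual review of intent and keywords.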

5. Add or Update the Page in Your Sitemap

Make sure the page is included in your XML sitemap. Google uses sitemaps to discover and prioritize URLs, which matters most when your internal linking is weak.

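If it’s already in the sitemap, check that its lastmod value is present and matches the date of the page’s last significant update. Google uses lastmod only when it is consistently accurate, so don’t change it unless the content has actually changed. It also helps to show the last-updated date on the page itself.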
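If your CMS doesn't manage lastmod for you, a sitemap is simple enough to generate. This minimal sketch uses Python's standard library; the build_sitemap name is hypothetical, and where the modification dates come from (a CMS field, a database column) is an assumption you'll need to fill in for your own stack. The key point is that each lastmod reflects a real content change, not the time the file was generated.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs.

    last_modified_date should be the date of the last significant content
    change, not the date the sitemap was generated.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes characters like &
        # A plain YYYY-MM-DD date is a valid W3C Datetime value for lastmod.
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages and dates; in practice these come from your CMS.
    print(build_sitemap([
        ("https://example.com/", date(2024, 5, 2)),
        ("https://example.com/old-guide/", date(2024, 5, 28)),
    ]))
```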

6. Submit the Page for Reindexing

After making changes to the page content, inspect the URL with the URL Inspection tool in Search Console and click “Request Indexing” to ask Google to recrawl it. This can shorten the time it takes Googlebot to revisit the page, although it doesn’t guarantee an immediate crawl.

Step 3: Analyze Your Crawl Stats Reports

Once you’ve cleaned up low-value content, the next step is to understand how Google is crawling your site as a whole. This gives you a broader view of how efficiently your site is being crawled and whether crawl budget is being wasted.

To access your crawl data, open Settings in Google Search Console and click “Open Report” next to Crawl stats.

This report shows how often Googlebot visits your site, how many requests it makes daily, how your server responds, and what types of files it crawls.

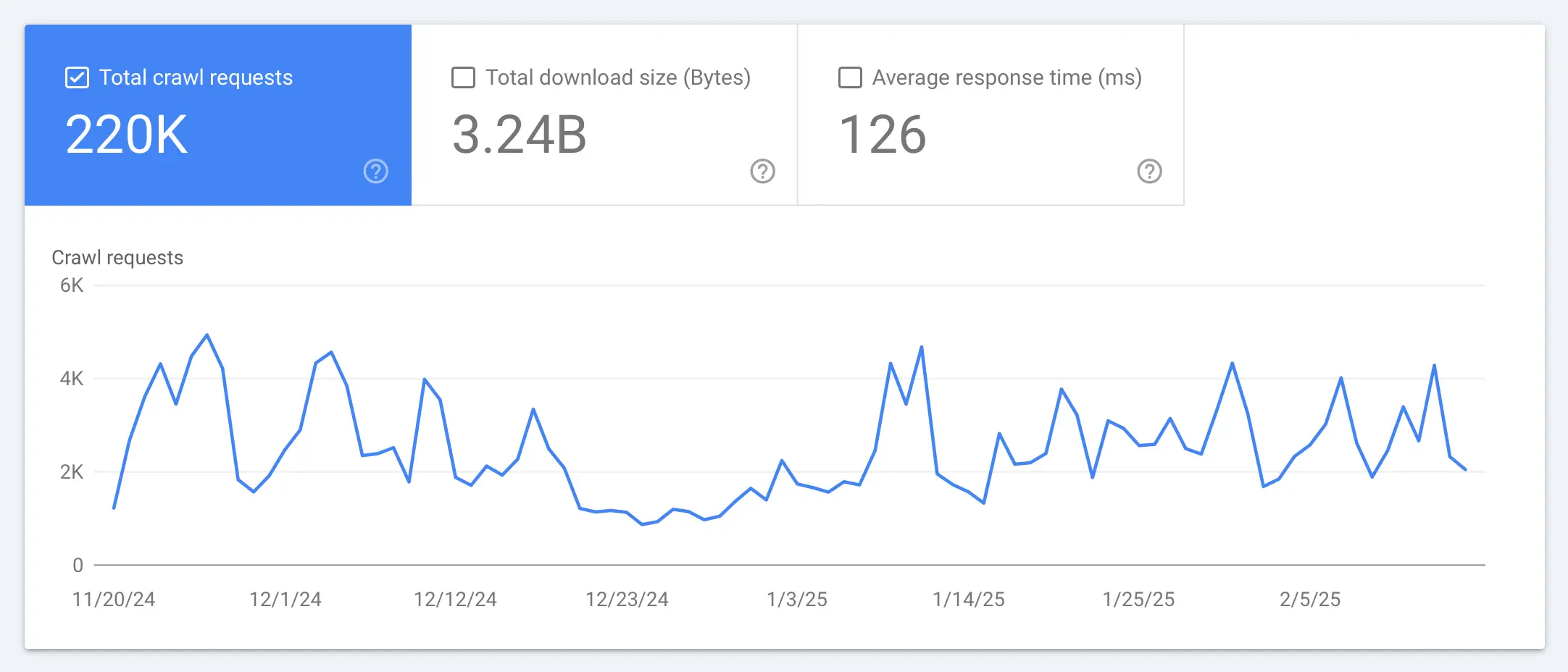

Start by looking at your total crawl requests over time. If there are large drops or unexpected spikes, it may indicate a problem.

For example, a consistent drop might suggest that Google is losing interest in your site or encountering server issues that prevent crawling.
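To make "large drops or unexpected spikes" less subjective, you can compare each day with the days before it. This sketch assumes you've copied daily crawl-request totals out of the report (or counted Googlebot hits in your own logs) into a list; the function name, the 7-day window, and the 50% tolerance are arbitrary, hypothetical defaults, not Google-defined limits.

```python
from statistics import median


def flag_anomalies(daily_requests, window=7, tolerance=0.5):
    """Flag days whose crawl requests deviate sharply from the trailing median.

    daily_requests: list of (day_label, request_count) in chronological order.
    A day is flagged when it falls below (1 - tolerance) times, or rises above
    (1 + tolerance) times, the median of the preceding `window` days.
    """
    flags = []
    for i in range(window, len(daily_requests)):
        baseline = median(count for _, count in daily_requests[i - window:i])
        day, count = daily_requests[i]
        if count < baseline * (1 - tolerance):
            flags.append((day, count, baseline, "drop"))
        elif count > baseline * (1 + tolerance):
            flags.append((day, count, baseline, "spike"))
    return flags
```

A median baseline is used instead of a mean so that a single unusual day doesn't distort the comparison for the days that follow it.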

Ideally, after you fix low-crawled pages using the steps above, your crawl requests will increase, which is a sign that Google has picked up your changes.

Also look at the average response time. If Googlebot consistently experiences delays when loading your pages, it deliberately slows down how often it requests them. As a result, your updated content may take longer to get noticed, and low-crawled pages may not be revisited.

To improve server performance, consider switching to better hosting, adding caching, or using a CDN. Faster websites improve the user experience and can lead to faster indexing and better freshness signals overall.

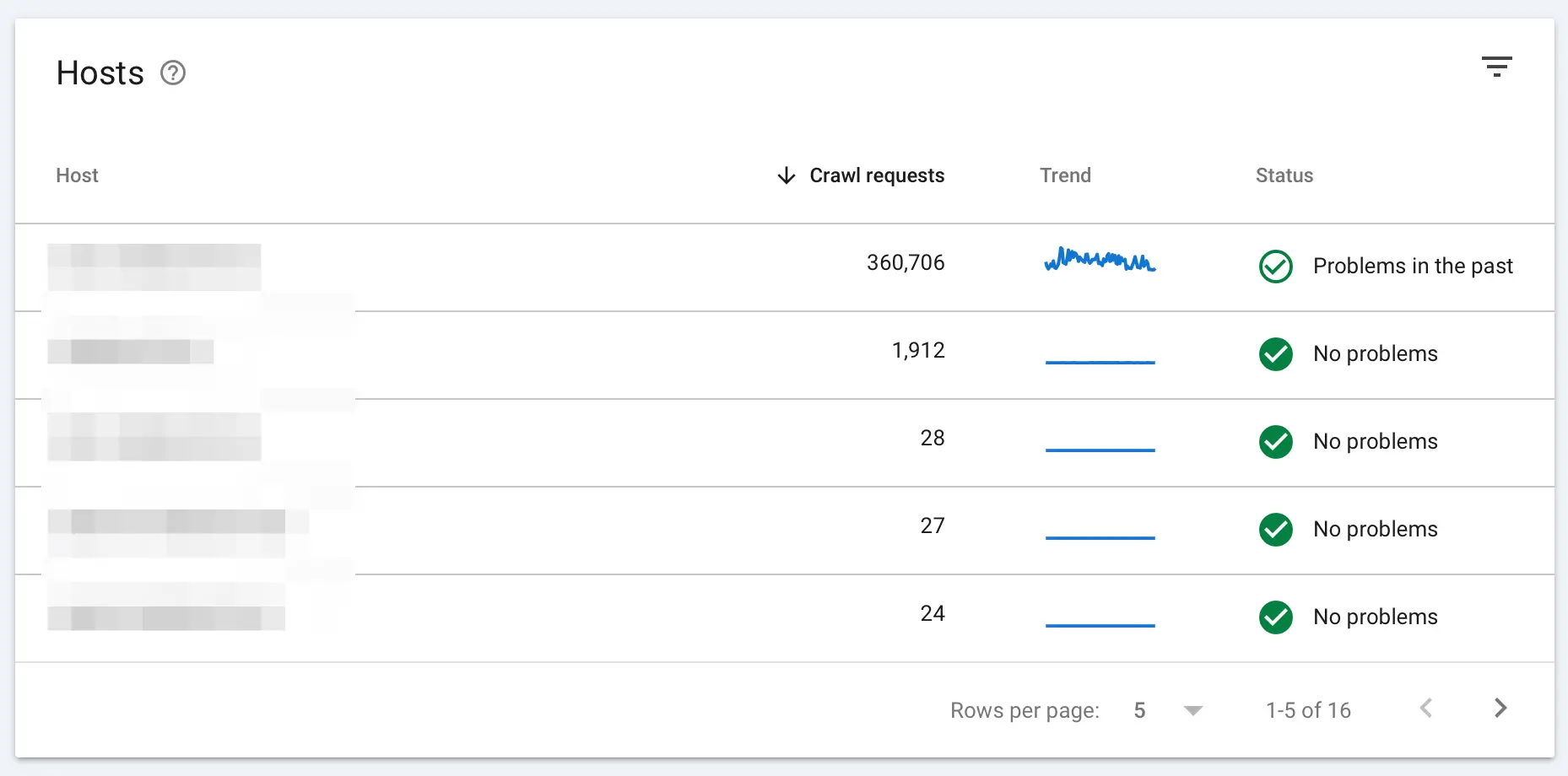

Next, look at which hosts are being crawled, paying particular attention to the status column. If problems are reported, investigate the cause and fix them.

If you see Google crawling subdomains like staging.yoursite.com or whn.domain.com that aren’t meant to be indexed, your crawl budget is being wasted. Block crawling with a robots.txt file on each of those subdomains; robots.txt rules apply only to the host that serves them, so your main site’s file won’t cover them.
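Before deploying a robots.txt file for a host you want kept out of the crawl, you can sanity-check its rules with Python's built-in robots.txt parser. This sketch tests a blanket Disallow rule; the is_blocked helper is hypothetical, and Python's parser doesn't replicate Google's matching exactly (for example, it doesn't support wildcard paths), so treat it as a quick check rather than proof of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt for a host that should not be crawled at all,
# e.g. the file served at https://staging.yoursite.com/robots.txt
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""


def is_blocked(robots_txt, url, user_agent="Googlebot"):
    """Return True if the robots.txt rules disallow user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ["/", "/products/new-page/"]:
        url = "https://staging.yoursite.com" + path
        print(url, "blocked" if is_blocked(STAGING_ROBOTS, url) else "allowed")
```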

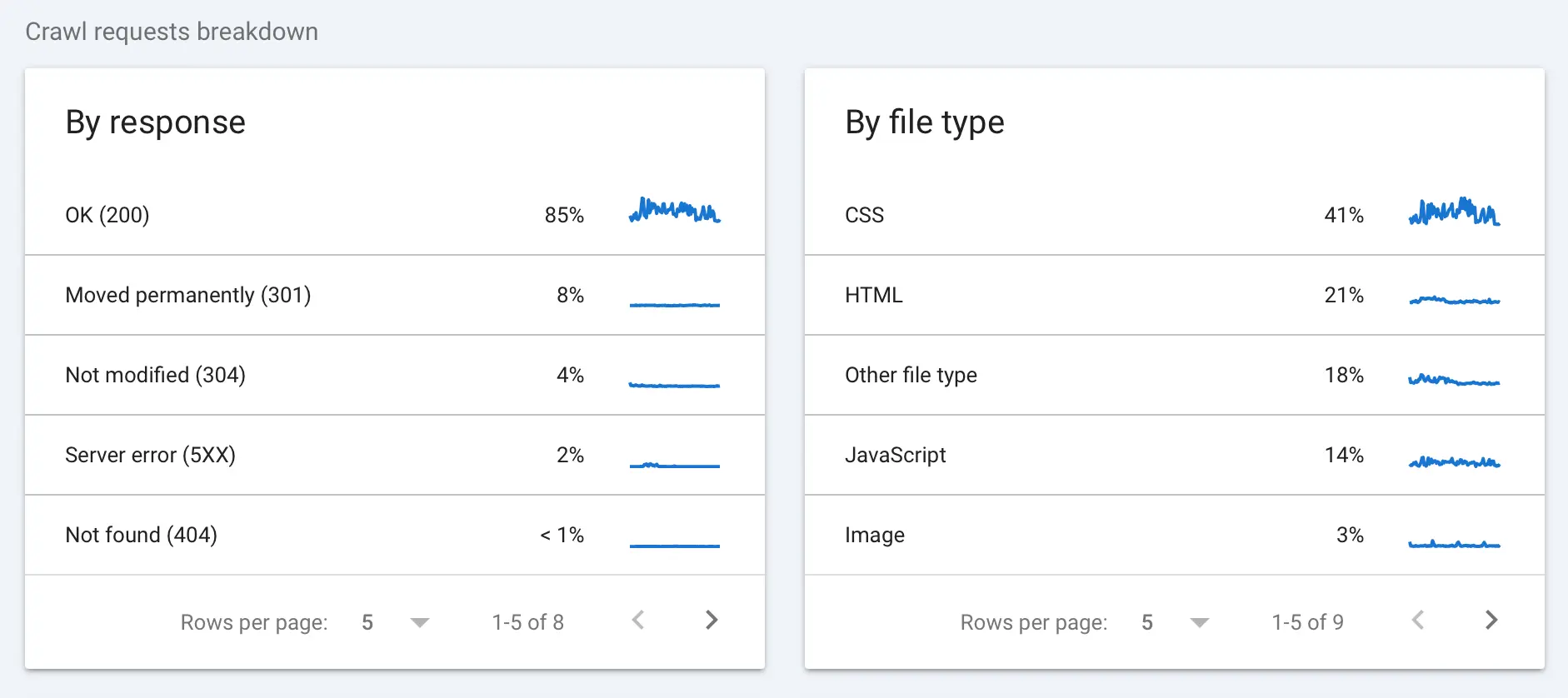

Continue your investigation by clicking on the main host name (usually your domain) and paying attention to response codes.

A high number of server errors (5xx) or not-found (404) errors means Google is hitting broken links or server problems. Server errors in particular cause Googlebot to slow its crawl rate, which hurts your site’s crawl efficiency.
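If you have access to your server's access logs, you can cross-check the report by counting the responses Googlebot received. This sketch assumes logs in the common "combined" format and identifies Googlebot by its user-agent string only; that string can be spoofed, so for precise numbers verify the requesting IPs as Google's documentation describes. The regex and function name are illustrative, not part of any tool.

```python
import re
from collections import Counter

# Matches the request, status and user-agent fields of the "combined" log format.
LOG_PATTERN = re.compile(
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_summary(log_lines):
    """Count status classes (2xx..5xx) and tally 404/5xx paths for Googlebot requests."""
    classes = Counter()
    problem_paths = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        status = int(match["status"])
        classes[f"{status // 100}xx"] += 1
        if status == 404 or status >= 500:
            problem_paths[(status, match["path"])] += 1
    return classes, problem_paths
```

The problem_paths tally points you to the specific broken URLs or failing endpoints worth fixing first.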

Step 4: Monitor Progress

Once you’ve made changes to your site, the final step is to monitor how Google responds. This is how you validate that your efforts are working in the right direction.

Start by revisiting the Page Indexing Report. Check if previously low-crawled pages are now being revisited. You should see their “Last crawled” dates updating more frequently, especially if you’ve improved internal links or refreshed the content.
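If you saved the list of stale URLs from Step 1, comparing it with a fresh export shows which pages Google has revisited. This sketch assumes both snapshots are already loaded into dictionaries mapping each URL to its last-crawled date; how you load them (for example, from two CSV exports) and the function names are hypothetical.

```python
from datetime import date


def recrawled_pages(before, after):
    """URLs whose last-crawled date moved forward between two snapshots.

    before/after: dicts mapping URL -> date the page was last crawled.
    Returns (url, old_date, new_date) tuples for pages present in both snapshots.
    """
    changed = []
    for url, old in sorted(before.items()):
        new = after.get(url)
        if new is not None and new > old:
            changed.append((url, old, new))
    return changed


def not_yet_recrawled(before, after):
    """URLs from the earlier snapshot with no newer crawl date in the later one.

    A URL missing from the later snapshot also counts as not recrawled.
    """
    return sorted(url for url, old in before.items() if after.get(url, old) <= old)
```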

In the Crawl Stats report, look at your overall crawl volume (total crawl requests). A gradual increase in daily crawl requests can indicate that Google is allocating more resources to your site, a positive sign that your changes are improving how Google perceives your domain.

Keep an eye on average response times and error codes as well. If response times improve and error rates drop, Google is more likely to crawl deeper and more often.

Finally, check the Performance report for search results in Search Console to see whether impressions increase over time, and the Page indexing report to see whether your number of indexed pages grows. While indexing doesn’t guarantee ranking, it’s a necessary first step, and if you’re seeing more content in the index, your technical SEO improvements are working.

Conclusion

You shouldn’t expect Google to crawl and index everything you publish on your website. Your job is to make it easy for Google to find, understand, and prioritize the pages that matter most.

By identifying low-crawl pages, improving internal signals, fixing technical issues, and monitoring crawl behavior, you take control of how Googlebot interacts with your site.

Use the crawl stats and indexing reports regularly to maintain a cleaner, faster, and more index-worthy website.